This post is part of a series where I try to classify online review text in more and more concrete ways. Right now I'm training a classifier to accurately classify one (bad) vs five (good) starred reviews. In the last post I had done some initial training and testing of an NLTK Bayesian classifier. In this post I want to see if I can improve the accuracy score of my classifier by getting smarter about which features I include.

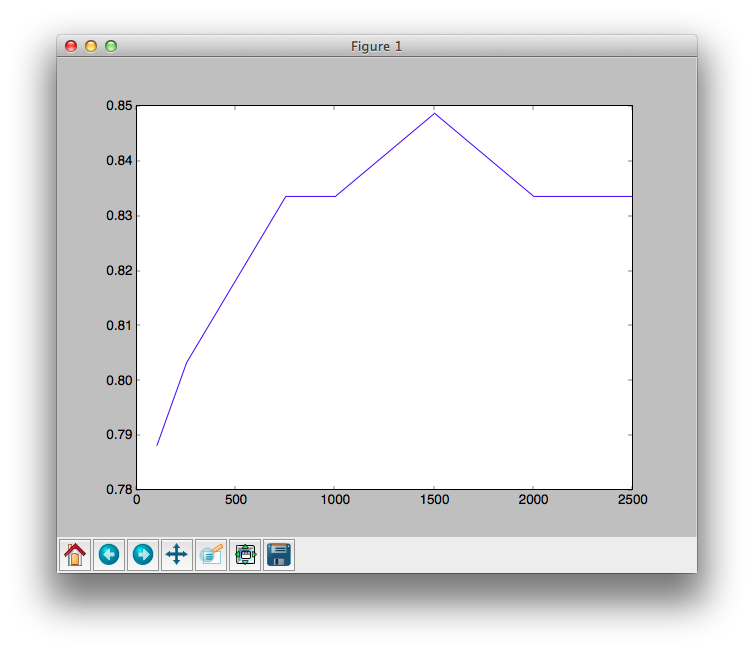

In the last post I had experimented with varying the quantity of feature set, and had found that while encoding more features into a classifier during training helps accuracy, there is an eventual accuracy ceiling. My feature set came from taking the top N words from a frequency distribution of all words in the reviews text. Here is what the accuracy curve looks like:

One other way to improve accuracy is to address the 'quality' of the feature set by looking at features not only in terms of their frequency across the training corpus, but looking at their relative frequencies across classifications.

In the review classification done so far, individual words are the features. I'm going to try to 'tune' feature sets in several different ways -- I have no idea if these will work, but they seem reasonable. I'm going to call these attempts hypotheses, because my goal is to prove them to be true or false, with relatively minimal effort.

P(review rating | features) = P(features, review rating)

In the equation above, the P(features, review rating) term is the multiplied probabilities of each P(feature, review rating). If I'm looking for a higher overall probability that a document is one star over five star or vice versa, having per feature probabilities that are similar for one star or five star reviews means that my overall probabilities for one and five star will be close to equal, which could tip classification results 'the other way' and increase my error rate.

I ended up recoding the building of the training and test data so that the data sets being built had a more even distribution of ratings across them:

When I built up the training data using this method, the sets were returned in the buckets[] array:

Each training set in this list is actually an array of (textBag, rating) tuples:

buckets = [[(bagOfText,rating)...],[..]]

I want to get frequency distributions of common terms from one and five star reviews in the training data, so that I can find terms with a high probability differential:

# get common terms and frequency differentials

allWords1 = [w for (textBag,rating) in buckets['training'] for w in textBag if rating == 1]

commonTerms = [w for w in fd1.keys() if w in fd5.keys()]

# now get frequency differentials

commonTermFreqs = [(w,fd1.freq(w),fd5.freq(w),abs(fd1.freq(w)-fd5.freq(w)))

for w in commonTerms]

commonTermFreqs.sort(key=itemgetter(3),reverse=True)

Now we've got common terms, sorted by their absolute differential between frequency distributions in 1 and 5 star reviews.

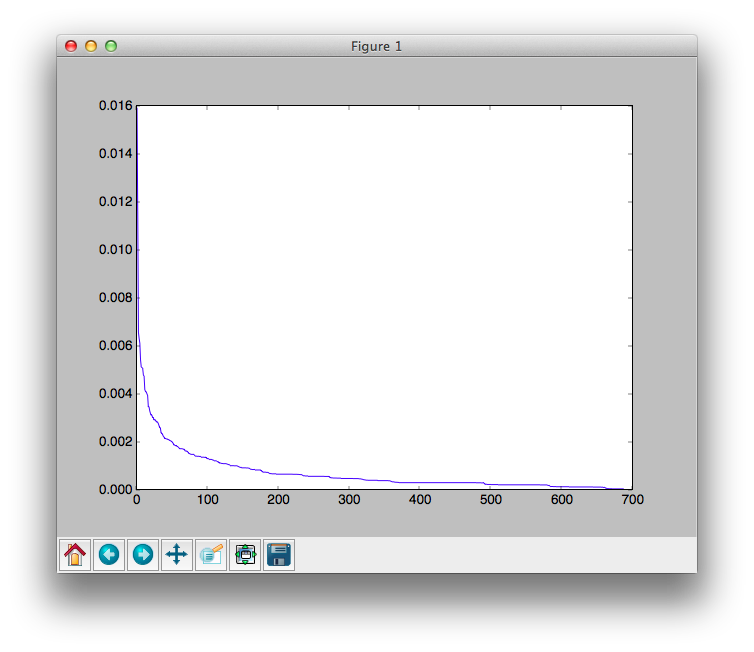

if I plot this distribution:

freqdiffs = [diff for (a,b,c,diff) in commonTermFreqs]

plt.plot(freqdiffs)

plt.show()

I can see that it falls off sharply:

This looks like a Zipfian distribution: "given some corpus of natural language utterances, the frequency of any word is inversely proportional to its rank in the frequency table. Thus the most frequent word will occur approximately twice as often as the second most frequent word, three times as often as the third most frequent word..."

The shape of this distribution implies that only a small subset of the terms actually have a frequency differential that really 'matters' in the hypothesis -- all terms aren't needed. I can start arbitrarily by keeping all terms with a frequency differential > 0.001 to quickly test the hypothesis. That leaves 131 of the original 688 common terms.

Note that in getting and filtering common terms, I have not retained the terms that very strongly signal one review rating or another: those would be the terms that exist only in one review rating corpus or another. Note that even though those terms do not exist in one or the other review corpus, and that would make the calculation go to zero, the non existent terms are 'smoothed out' by including them in the other corpus and adding a very small value to the frequency of all terms in that corpus, which guarantees that there are no terms with a zero frequency, and the Bayesian calculation won't zero out.

I would need to add those terms into the set of terms that we filter by.

The full set of filtered terms is comprised of both uncommon and filtered common words:

filterTerms = [w for (w,x,y,diff) in commonTermFreqs if diff > 0.001]

And I use those words as features identified at encoding time:

In the last post I had experimented with varying the quantity of feature set, and had found that while encoding more features into a classifier during training helps accuracy, there is an eventual accuracy ceiling. My feature set came from taking the top N words from a frequency distribution of all words in the reviews text. Here is what the accuracy curve looks like:

One other way to improve accuracy is to address the 'quality' of the feature set: looking at features not only in terms of their frequency across the training corpus, but also in terms of their relative frequencies across classes (here, one-star and five-star ratings).

In the review classification done so far, individual words are the features. I'm going to try to 'tune' feature sets in several different ways -- I have no idea if these will work, but they seem reasonable. I'm going to call these attempts hypotheses, because my goal is to prove them to be true or false, with relatively minimal effort.

Hypothesis 1: Throw away features with a low 'frequency differential'

My hypothesis is that some features have a much higher chance of appearing in a negative review than in a positive review, and vice versa. Those are the features I want to keep. Other features have approximately the same chance of appearing in either type of review (positive or negative).

$$P(\text{rating} \mid \text{features}) = \frac{P(\text{features}, \text{rating})}{P(\text{features})} \propto P(\text{features}, \text{rating}) = P(\text{rating}) \prod_i P(\text{feature}_i \mid \text{rating})$$

In the equation above, P(features) is the same for every rating, so it can be ignored when comparing ratings, and under the naive Bayes independence assumption P(features, rating) is the prior P(rating) multiplied by each per-feature probability P(feature | rating). If I'm looking for a higher overall probability that a document is one star rather than five star, or vice versa, features whose probabilities are similar for one-star and five-star reviews contribute little to telling the two apart, and their small, noisy differences can still add up and tip classification results 'the other way', increasing my error rate.
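To make the multiplication concrete, here is a minimal sketch that works in log space, where the product becomes a sum, and scores a review as the log-odds of one star versus five stars. The function name and every probability in it are hypothetical, chosen only to illustrate the hypothesis: each feature adds log(P(feature | 1 star) / P(feature | 5 star)), so a feature with exactly equal probabilities adds nothing, but many slightly unequal features can together outweigh one strongly informative feature.

```python
import math

def log_odds_one_vs_five(prior1, prior5, feature_probs):
    """Hypothetical helper: naive Bayes log-odds of one star vs. five stars.

    feature_probs: list of (P(feature | 1 star), P(feature | 5 star)) pairs
    for the features seen in a review. A positive result means one star wins.
    """
    score = math.log(prior1 / prior5)
    for p1, p5 in feature_probs:
        # each feature adds log(p1 / p5); equal probabilities add exactly 0
        score += math.log(p1 / p5)
    return score

# Hypothetical probabilities, for illustration only.
strong = [(0.03, 0.005)]        # six times likelier in one-star reviews
weak = [(0.010, 0.011)] * 20    # nearly equal, each leaning slightly to five stars

only_strong = log_odds_one_vs_five(0.5, 0.5, strong)
with_weak = log_odds_one_vs_five(0.5, 0.5, strong + weak)
print(only_strong > 0, with_weak > 0)
```

With only the strong feature the score favors one star; adding twenty near-equal features that each lean slightly toward five stars flips the decision.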

I can validate this hypothesis by filtering out features with a low probability differential and keeping the ones with a high probability differential: a large difference between P(feature | 1 star) and P(feature | 5 star).

Building The Feature Set

I had trained and tested the classifier by splitting the input data into a training set and a test set, then training on one and testing on the other. I will recreate that process now to get the raw data, so that I can remove common terms with a low probability differential:

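```python
# SiteJabberReviews and AnalyzeSiteData are my own helper classes (defined elsewhere, not shown here)
sjr = SiteJabberReviews(pageUrl, filename)
sjr.load()
asd = AnalyzeSiteData()
```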

I ended up rewriting the code that builds the training and test data so that every set gets the same proportion of each rating:

```python
import random
from collections import defaultdict

# method of AnalyzeSiteData
def generateLearningSetsFromReviews(self, reviews, ratings, buckets):
    # check that the bucket percentages don't sum to more than 1
    # (small tolerance for floating-point rounding)
    if sum(buckets.values()) > 1.0 + 1e-9:
        raise ValueError('percentage values must be floats and must sum to 1.0')

    # get collated sets of reviews by rating, across all review sets
    reviewsByRating = defaultdict(list)
    for reviewSet in reviews:
        for rating in ratings:
            reviewList = [(self.textBagFromRawText(review.text), rating)
                          for review in reviewSet.reviewsByRating[rating]]
            reviewsByRating[rating].extend(reviewList)
    for rating in ratings:
        random.shuffle(reviewsByRating[rating])  # mix up reviews from different reviewSets

    # break each rating's reviews into percentage buckets,
    # so every bucket gets the same proportion of each rating
    learningSets = defaultdict(list)
    for rating in ratings:
        sz = len(reviewsByRating[rating])
        lastidx = 0
        for (bucketName, pct) in buckets.items():
            idx = lastidx + int(pct * sz)
            learningSets[bucketName].extend(reviewsByRating[rating][lastidx:idx])
            lastidx = idx
    return learningSets
```

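When I built up the training data using this method, the sets were returned in the buckets dictionary, keyed by bucket name:

```python
buckets = asd.generateLearningSetsFromReviews([sjr], [1, 5], {'training': 0.8, 'test': 0.2})
```

Each set in this dictionary is actually a list of (textBag, rating) tuples:

```python
# buckets == {'training': [(textBag, rating), ...],
#             'test':     [(textBag, rating), ...]}
```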
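Since the method above depends on my SiteJabberReviews and AnalyzeSiteData classes, here is a standalone sketch of the same per-rating bucketing loop that runs on toy data, so the stratification can be checked directly. The function name stratified_split and its seed parameter are hypothetical additions; the 273/55 counts are the one-star/five-star totals reported later in this post.

```python
import random
from collections import defaultdict

def stratified_split(reviewsByRating, buckets, seed=0):
    """Hypothetical helper: split each rating's reviews across buckets by fraction.

    reviewsByRating: dict rating -> list of (textBag, rating) tuples
    buckets: dict bucket name -> fraction, e.g. {'training': 0.8, 'test': 0.2}
    """
    rng = random.Random(seed)
    learningSets = defaultdict(list)
    for rating, items in reviewsByRating.items():
        items = list(items)
        rng.shuffle(items)
        lastidx = 0
        for bucketName, pct in buckets.items():
            idx = lastidx + int(pct * len(items))  # same truncating arithmetic as above
            learningSets[bucketName].extend(items[lastidx:idx])
            lastidx = idx
    return learningSets

# Toy data with the same 273 one-star / 55 five-star imbalance.
toy = {1: [(['bad'], 1)] * 273, 5: [(['great'], 5)] * 55}
sets = stratified_split(toy, {'training': 0.8, 'test': 0.2})
for name in ('training', 'test'):
    counts = {r: sum(1 for _, rating in sets[name] if rating == r) for r in (1, 5)}
    print(name, counts)
```

Each bucket keeps roughly the same one-star/five-star ratio as the full data. Because int() truncates, one one-star review (273 - 218 - 54) lands in no bucket; the same is true of the method above.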

I want to get frequency distributions of common terms from one and five star reviews in the training data, so that I can find terms with a high probability differential:

```python
from operator import itemgetter
from nltk import FreqDist

# get common terms and frequency differentials
allWords1 = [w for (textBag, rating) in buckets['training'] for w in textBag if rating == 1]
fd1 = FreqDist(allWords1)
allWords5 = [w for (textBag, rating) in buckets['training'] for w in textBag if rating == 5]
fd5 = FreqDist(allWords5)
commonTerms = [w for w in fd1 if w in fd5]

# now get frequency differentials: (word, freq in 1 star, freq in 5 star, |difference|)
commonTermFreqs = [(w, fd1.freq(w), fd5.freq(w), abs(fd1.freq(w) - fd5.freq(w)))
                   for w in commonTerms]
commonTermFreqs.sort(key=itemgetter(3), reverse=True)
```
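For anyone without NLTK installed, here is a dependency-free sketch of the same computation, using collections.Counter in place of FreqDist (FreqDist.freq(w) is a word's count divided by the total count, which is what the division below does). The function name and the sample text bags are hypothetical.

```python
from collections import Counter
from operator import itemgetter

def frequency_differentials(trainingSet):
    """Hypothetical helper: (word, freq1, freq5, |freq1 - freq5|) for words
    seen in both classes, sorted by differential, largest first.

    trainingSet: list of (textBag, rating) tuples with ratings 1 and 5.
    """
    fd1 = Counter(w for textBag, rating in trainingSet if rating == 1 for w in textBag)
    fd5 = Counter(w for textBag, rating in trainingSet if rating == 5 for w in textBag)
    n1, n5 = sum(fd1.values()), sum(fd5.values())
    rows = []
    for w in fd1.keys() & fd5.keys():      # words seen in both classes
        f1, f5 = fd1[w] / n1, fd5[w] / n5  # relative frequency, like FreqDist.freq(w)
        rows.append((w, f1, f5, abs(f1 - f5)))
    rows.sort(key=itemgetter(3), reverse=True)
    return rows

# Hypothetical text bags.
training = [(['slow', 'refund', 'the'], 1), (['never', 'the'], 1),
            (['fast', 'the', 'great'], 5), (['great', 'refund'], 5)]
for row in frequency_differentials(training):
    print(row)
```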

Now I have the common terms, sorted by the absolute difference between their relative frequencies in one-star and five-star reviews.

If I plot this distribution:

```python
import matplotlib.pyplot as plt

freqdiffs = [diff for (word, freq1, freq5, diff) in commonTermFreqs]
plt.plot(freqdiffs)
plt.show()
```

I can see that it falls off sharply:

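This looks like a Zipfian distribution. Zipf's law describes word frequencies: "given some corpus of natural language utterances, the frequency of any word is inversely proportional to its rank in the frequency table. Thus the most frequent word will occur approximately twice as often as the second most frequent word, three times as often as the third most frequent word..." Here the ranked quantity is a frequency differential rather than a raw frequency, so the resemblance is in the shape of the curve: a few large values followed by a long tail.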
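To check the Zipf-like impression more directly than by eye: a strict Zipf curve (value proportional to 1/rank) is a straight line with slope -1 on a log-log plot. A minimal sketch, assuming freqdiffs is the descending list from the plotting code above; the helper name loglog_slope is hypothetical.

```python
import math

def loglog_slope(values):
    """Hypothetical helper: least-squares slope of log(value) vs. log(rank).

    Ranks run 1..n in the given order; zero values are skipped because
    their log is undefined (ranks of the remaining values are unchanged).
    """
    points = [(math.log(rank), math.log(v))
              for rank, v in enumerate(values, start=1) if v > 0]
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((x - mean_x) ** 2 for x, _ in points)
    return cov / var

# Synthetic check: an exact 1/rank curve over 688 ranks has slope -1.
print(round(loglog_slope([0.05 / r for r in range(1, 689)]), 3))
```

Calling loglog_slope(freqdiffs) on the real data gives a number to compare against -1; a slope far from -1 would mean the curve is long-tailed but not strictly Zipfian.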

The shape of this distribution implies that only a small subset of the terms have a frequency differential that really 'matters' for the hypothesis; not all terms are needed. To test the hypothesis quickly, I start with an arbitrary cutoff and keep all terms with a frequency differential > 0.001. That leaves 131 of the original 688 common terms.
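Because the 0.001 cutoff is arbitrary, it is cheap to see how many terms survive at several cutoffs before committing to one. A small sketch; the helper name count_above and the sample rows are hypothetical, but the rows have the same (word, freq1, freq5, diff) shape as commonTermFreqs.

```python
def count_above(commonTermFreqs, thresholds):
    """Hypothetical helper: map each threshold to how many terms have diff > threshold."""
    return {t: sum(1 for (_, _, _, diff) in commonTermFreqs if diff > t)
            for t in thresholds}

# Hypothetical rows in the (word, freq1, freq5, diff) shape built above.
rows = [('the', 0.04, 0.05, 0.01), ('refund', 0.006, 0.001, 0.005),
        ('great', 0.001, 0.003, 0.002), ('and', 0.0201, 0.0200, 0.0001)]
print(count_above(rows, [0.0005, 0.001, 0.005]))
```

On the real data, count_above(commonTermFreqs, [...]) shows how quickly the 688 common terms thin out as the cutoff rises.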

Note that by keeping only common terms, I have dropped the terms that most strongly signal one rating or the other: the terms that appear in only one rating's corpus. A term that never appears in one corpus would have a zero probability there, which would zero out the whole product for that rating. The classifier avoids this by smoothing: it adds a small value to every count, which guarantees that no term has a zero probability, so the Bayesian calculation won't zero out.
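To see how smoothing rescues a term that never appears in one class, here is a sketch of the expected likelihood estimate, (count + 0.5) / (total + 0.5 * bins). As far as I know this is the default estimator for nltk.NaiveBayesClassifier.train (its estimator argument defaults to ELEProbDist), but treat that as an assumption to check against the NLTK docs. The helper ele_prob and the 'refund' example are hypothetical; 44 is the number of five-star training reviews from the 80/20 split of 55.

```python
def ele_prob(count, total, bins, gamma=0.5):
    """Hypothetical helper: expected likelihood estimate,
    (count + gamma) / (total + gamma * bins)."""
    return (count + gamma) / (total + gamma * bins)

# Hypothetical: 'contains:refund' is True in 0 of 44 five-star training reviews.
# A boolean feature has two values (True / False), so bins = 2.
unsmoothed = 0 / 44
smoothed = ele_prob(0, 44, bins=2)   # 0.5 / 45, small but not zero
print(unsmoothed, smoothed)
```

The smoothed estimates for True and False still sum to 1, so the distribution stays valid while no value gets probability zero.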

So I need to add those single-corpus terms back into the set of terms that I filter by.

The full set of filter terms consists of both the single-corpus terms and the filtered common terms:

```python
# common terms whose frequency differential clears the (arbitrary) cutoff
filterTerms = [w for (w, freq1, freq5, diff) in commonTermFreqs if diff > 0.001]

# terms that appear only in one-star or only in five-star reviews
fd1Only = [w for w in fd1 if w not in fd5]
filterTerms.extend(fd1Only)
fd5Only = [w for w in fd5 if w not in fd1]
filterTerms.extend(fd5Only)

defaultWordSet = set(filterTerms)  # rename so I don't have to rewrite the encoding method
```

And I use those words as the features emitted at encoding time:

```python
def emitDefaultFeatures(tokenizedText):
    '''
    @param tokenizedText: an array of text features
    @return: a feature map from that text.
    '''
    tokenizedTextSet = set(tokenizedText)
    featureSet = {}
    for text in defaultWordSet:
        featureSet['contains:%s' % text] = text in tokenizedTextSet
    return featureSet
```
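A design note: emitDefaultFeatures reads the global defaultWordSet, which is why the filter terms had to be renamed. Here is a sketch of an alternative (the function name emit_features is hypothetical) that takes the vocabulary as a parameter, with functools.partial binding it so the result still takes one argument, assuming encodeData calls the feature function with just the token list, as the calls below suggest.

```python
from functools import partial

def emit_features(tokenizedText, vocabulary):
    """Hypothetical helper: boolean 'contains:<word>' features for every word
    in vocabulary. Same output shape as emitDefaultFeatures, but the
    vocabulary is passed in instead of read from a global."""
    tokens = set(tokenizedText)
    return {'contains:%s' % word: word in tokens for word in vocabulary}

# Hypothetical vocabulary; in this post it would be set(filterTerms).
emitFiltered = partial(emit_features, vocabulary={'refund', 'great'})
print(emitFiltered(['slow', 'refund', 'never']))
```

With this, asd.encodeData(rawTrainingSetData, emitFiltered) could replace the global-variable rename, and swapping feature sets becomes a matter of binding a different vocabulary.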

Testing The Hypothesis

Now I can train the classifier. asd.encodeData() takes care of encoding features from the training and test sets by calling emitDefaultFeatures() for each review.

```python
import nltk

# assumed: the raw training and test sets are the buckets built above
rawTrainingSetData = buckets['training']
rawTestSetData = buckets['test']

encodedTrainSet = asd.encodeData(rawTrainingSetData, emitDefaultFeatures)
classifier = nltk.NaiveBayesClassifier.train(encodedTrainSet)
encodedTestSet = asd.encodeData(rawTestSetData, emitDefaultFeatures)
print(nltk.classify.accuracy(classifier, encodedTestSet))
```

And I get an accuracy of 0.83: the same accuracy I got with no manipulation of the feature set, and 0.02 below the best accuracy I got in the last post. Whoops.

Detailed Error Analysis

There is one other step I can take to understand the accuracy of the classifier: analyzing the errors made on the test set. If I know how I misclassified the data, that can help me improve the classifier.

```python
# collect misclassified test reviews, grouped by their true rating
shouldBeClassed1 = []
shouldBeClassed5 = []
for (textbag, rating) in buckets['test']:
    testRating = classifier.classify(emitDefaultFeatures(textbag))
    if testRating != rating:
        if rating == 1:
            shouldBeClassed1.append(textbag)
        else:
            shouldBeClassed5.append(textbag)
```
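Grouping errors by true rating like this is essentially a confusion matrix. Here is a small sketch that tallies (actual, predicted) pairs and per-class recall; the helper name and toy pairs are hypothetical. On the real data, pairs would be [(rating, classifier.classify(emitDefaultFeatures(textbag))) for (textbag, rating) in buckets['test']]. NLTK also provides a ConfusionMatrix class in nltk.metrics for the same purpose.

```python
from collections import Counter

def per_class_recall(pairs, labels):
    """Hypothetical helper. pairs: list of (actual, predicted) ratings.
    Returns label -> fraction of that label's reviews predicted correctly."""
    counts = Counter(pairs)
    recall = {}
    for label in labels:
        total = sum(n for (actual, _), n in counts.items() if actual == label)
        recall[label] = counts[(label, label)] / total if total else float('nan')
    return recall

# Hypothetical results: every one-star review right, every five-star review wrong.
pairs = [(1, 1)] * 5 + [(5, 1)] * 2
counts = Counter(pairs)
print(counts)                           # tally per (actual, predicted) pair
print(per_class_recall(pairs, [1, 5]))
```

A recall of 0 for one class is exactly the failure that a single accuracy number hides.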

A quick check on the error lists shows me that I've only made mistakes on the reviews that should be classified as positive:

```python
>>> print(len(shouldBeClassed5))
11
```

Wait a minute. That number looks familiar. Let me review the raw data again:

```python
>>> print(len(sjr.reviewsByRating[5]))
55
>>> print(int(0.2 * len(sjr.reviewsByRating[5])))
11
```

In other words, I misclassified every positive review in the test data: the error analysis found 11 misclassified positive reviews, and an 80% training/20% test split of 55 positive reviews leaves exactly 11 positive reviews in the test set.

Switching back to the original feature selection (the top terms from a FreqDist of all terms in the training data) shows that it also misclassified all 11 positive reviews.
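That also explains why 0.83 looked 'decent'. A quick worked check, using the 273/55 totals from this post and the int(pct * size) bucketing used above: with every one-star test review right and every five-star test review wrong, the accuracy comes out to the same 0.83, while recall on positive reviews is zero. A classifier that labeled everything one-star would score the same.

```python
# Test-set composition implied by the split: int(0.2 * count) per rating.
neg_test = int(0.2 * 273)   # one-star reviews in the test set
pos_test = int(0.2 * 55)    # five-star reviews in the test set

# Every one-star review right, every five-star review wrong:
correct = neg_test
accuracy = correct / (neg_test + pos_test)
recall_pos = 0 / pos_test
print(round(accuracy, 2), recall_pos)   # 0.83 0.0
```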

Summary

This was one attempt to improve classifier accuracy by trying something reasonable with the feature set: removing features whose probability differential across the one-star and five-star review corpora was very small.

While the numbers initially looked 'decent', deeper analysis shows that my classifier misclassified every positive review in the test set. In the future I'll do error analysis of classifiers before trying to theorize about what could make the classifier more accurate.

Looking closer at the data, the data set had 55 total positive reviews and 273 total negative reviews. In other words, only about 17% of my data (55 of 328 reviews) was positive review data.

I had originally scraped only one reviewed site for data, but now I think I'm going to need to scrape more sites to get a more representative set of positive review data so that the classifier has more training examples.

In my next post I'm going to try to collect a more representative 'set' of data, and also take a slightly different approach to validating my classifier. I'm going to do error analysis up front and attempt to correct my classifier based on the errors I see, then test the classifier against new test data -- testing a fixed classifier against the data I used to fix it will give me a false sense of accuracy, because the test data used to do error analysis has in effect become training data.