Motivation:

A colleague of mine was bemoaning the lack of non trivial mapreduce examples out there, and wanted to teach a class on how to apply mapreduce to "something other than word count". This sounded like a great idea to me, so I volunteered to provide a mapreduce implementation of Conditional Probability (CP) calculations from user clickstream data.

CP calculation is one of many collaborative filtering techniques used to deliver personalized product/content recommendations to users at sites like Amazon, Pandora, Netflix, etc. Conditional Probability is the probability of one event happening once another has occurred. Doing this for page views can be used to determine which page views are correlated. Suggesting correlated pages to a user can greatly enhance their browsing and ultimately their purchasing experience.

For a web application, page correlation is possible to determine because user actions map to page views of products or content that are logged to the application servers. From those logs we can determine which pages are more likely to be viewed together by calculating potential correlations between all available pages. For any large scale website, this means that GB of data per day need to be processed to calculate CP and correlation-- applying map-reduce in this case allows large amounts of log data to be processed in parallel.

Implementing CP calculations via mapreduce is a great way to start to understand how collaborative filtering is done at scale -- it is initially non intuitive, but ends up being a great way to understand how mapreduce works. Of course, CP calculation is one part of a larger set of steps, one of which must be determining page correlation once CP has been calculated.

For me, it was necessary to understand Conditional Probability in order to correctly implement the CP calculation as well as understand how to use it to determine whether events are correlated. And that in turn requires a basic understanding of Probability. Note: my interpretation of basic probability concepts is solely mine, and I welcome all comments and corrections :)

Probability Overview:

The probability that an event occurs can be stated as P(A) = (total count of event A)/(total count of all events). For example, if I say there is a 5% probability that I will introduce a bug into our source code, that means that 5 out of every 100 commits I make contain bugs (people on my team would tend to round that number upward, but they only have anecdotal evidence so far:)

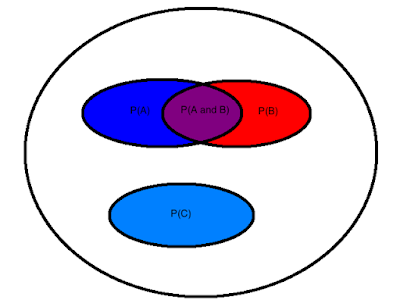

At this point it's useful to draw a picture. The Probability Space for a set of potential events is the representation of all possible events that can happen, with their assigned probabilities, expressed as P(Event).

In the Venn Diagram above, the intersection of P(A) and P(B) is shown in purple, and is expressed as A ∩ B. This is the probability space where both A and B occur, aka the intersection of P(A) and P(B). The complete space covered by A and B is expressed as A ∪ B aka the union of P(A) and P(B).

If two events are mutually exclusive, that means they can never occur together. In the above example, Event C is mutually exclusive with both A and B.

Independent events are never mutually exclusive events. Since independent events by definition may occur together, they cannot be mutually exclusive. For example: I can wear a blue shirt and/or commit a bug. These two events may be independent. They are not mutually exclusive. I cannot wear a blue shirt and wear no shirt at the same time. Those two events are mutually exclusive.

So events that can co-occur may be independent -- in other words they may not be correlated. If A and B are independent, then the probability that one happens does not depend on the probability that the other. They may also be dependent -- B may occur more frequently after A occurs. I'll discuss determining independence more after providing a definition of Conditional Probability.

Conditional Probability, Defined and Applied

Conditional Probability, the probability of B occurring when A has occurred, is written as P(B | A). From the Venn Diagram above, we are explicitly interested in the B ∩ A area -- the intersection of A and B -- but only when A occurs. This can be expressed as

P(B | A) = P(B ∩ A)/P(A)

A Venn Diagram doesn't really show the 'in between state' of A (occurred) and B (not occurred) too well. A better way to visualize CP is to use a Probability Tree. In this tree, each branch represents a set of probabilities for a single event. Events are represented as nodes. Subsequent events are sub branched from the original event nodes. The sum of each set of branches that share a common origin node must be 1 (or 100%).

The tree above is incomplete because it doesn't assign actual probabilities to the states. Possible probabilities are shown below.

To get the actual probability of an event, you traverse the tree and multiply the values of branches traversed. This works because of the previous definitions. Remember that B occurring given A is represented as B ∩ A. From the equation above,

P(B | A) = P(B ∩ A)/P(A) ->

B ∩ A = P(A)*P(B | A)

P(A) = 25%, P(B | A) = 33%, P(B∩A) = 25%*33% = 8.25%

The probability tree above defined an event space with two events that co-occur. One thing it doesn't give us is P(B). But we can get P(B), assuming that A is the only other event in the space, by adding up end states. B can be expressed as P(B∩A) + P(B∩A'). If we traverse A->B to get P(B∩A), we get P(A)*P(B | A). If we traverse A'->B to get P(B∩A'), we get P(A')*P(B | A'). The sum of these states is the total probability of B happening in this event space:

P(B) = P(A)*P(B | A) + P(A')*P(B | A')

= 25%*33% +75%*55% = 8.25% +41.25% = 49.5%.

Conditional Probability and Independent Events

All of that is neat (really!), but how do we tie it back to providing real value to our site? We do this by realizing how CP helps determine whether an event is independent or not. If A and B are truly independent, then

P(B | A) = P(B).

In other words, B is independent from A when B happens regardless of whether A happens. An example of this is two consecutive rolls of a dice. The probability of getting a 1 on the second roll is not affected by the value obtained from the first roll.

Contrast this with two dependent variables. If I had 5 pairs of black socks in a drawer (B), and 5 pairs of white socks (W), and wanted to calculate the probability of getting a black pair P(B) when pulling a pair out of my drawer, it would originally be 50%, but would change because there would be one pair less of black or white socks after my first pull.

If P(B | A) = P(B), and

P(B | A) = P(B ∩ A)/P(A), then for independent events,

P(B) = P(B ∩ A)/P(A) because P(B | A) = P(B) and

P(B)*P(A) = P(B ∩ A)

So if B is independent from A, the probability of B happening after A is the same as the probability of B multiplied by the probability of A.

If P(B ∩ A) > P(B)*P(A), the two are positively correlated -- there is a greater possibility of B occurring when A occurs than the two occurring independently.

If P(B ∩ A) < P(B)*P(A), the two are negatively correlated -- there is less of a possibility of B occurring when A occurs than the two occurring independently.

Variable (in)dependence is something to consider when recommending products or content. If we recommend content that we think is correlated when it is (a) independent or (b)negatively correlated, that recommendation may drown out other recommendations that are actually positively correlated, but whose probabilities are much less than the independent or negatively correlated item.

A colleague of mine was bemoaning the lack of non trivial mapreduce examples out there, and wanted to teach a class on how to apply mapreduce to "something other than word count". This sounded like a great idea to me, so I volunteered to provide a mapreduce implementation of Conditional Probability (CP) calculations from user clickstream data.

CP calculation is one of many collaborative filtering techniques used to deliver personalized product/content recommendations to users at sites like Amazon, Pandora, Netflix, etc. Conditional Probability is the probability of one event happening once another has occurred. Doing this for page views can be used to determine which page views are correlated. Suggesting correlated pages to a user can greatly enhance their browsing and ultimately their purchasing experience.

For a web application, page correlation is possible to determine because user actions map to page views of products or content that are logged to the application servers. From those logs we can determine which pages are more likely to be viewed together by calculating potential correlations between all available pages. For any large scale website, this means that GB of data per day need to be processed to calculate CP and correlation-- applying map-reduce in this case allows large amounts of log data to be processed in parallel.

Implementing CP calculations via mapreduce is a great way to start to understand how collaborative filtering is done at scale -- it is initially non intuitive, but ends up being a great way to understand how mapreduce works. Of course, CP calculation is one part of a larger set of steps, one of which must be determining page correlation once CP has been calculated.

For me, it was necessary to understand Conditional Probability in order to correctly implement the CP calculation as well as understand how to use it to determine whether events are correlated. And that in turn requires a basic understanding of Probability. Note: my interpretation of basic probability concepts is solely mine, and I welcome all comments and corrections :)

Probability Overview:

The probability that an event occurs can be stated as P(A) = (total count of event A)/(total count of all events). For example, if I say there is a 5% probability that I will introduce a bug into our source code, that means that 5 out of every 100 commits I make contain bugs (people on my team would tend to round that number upward, but they only have anecdotal evidence so far:)

At this point it's useful to draw a picture. The Probability Space for a set of potential events is the representation of all possible events that can happen, with their assigned probabilities, expressed as P(Event).

In the Venn Diagram above, the intersection of P(A) and P(B) is shown in purple, and is expressed as A ∩ B. This is the probability space where both A and B occur, aka the intersection of P(A) and P(B). The complete space covered by A and B is expressed as A ∪ B aka the union of P(A) and P(B).

Independent events are never mutually exclusive events. Since independent events by definition may occur together, they cannot be mutually exclusive. For example: I can wear a blue shirt and/or commit a bug. These two events may be independent. They are not mutually exclusive. I cannot wear a blue shirt and wear no shirt at the same time. Those two events are mutually exclusive.

So events that can co-occur may be independent -- in other words they may not be correlated. If A and B are independent, then the probability that one happens does not depend on the probability that the other. They may also be dependent -- B may occur more frequently after A occurs. I'll discuss determining independence more after providing a definition of Conditional Probability.

Conditional Probability, Defined and Applied

Conditional Probability, the probability of B occurring when A has occurred, is written as P(B | A). From the Venn Diagram above, we are explicitly interested in the B ∩ A area -- the intersection of A and B -- but only when A occurs. This can be expressed as

P(B | A) = P(B ∩ A)/P(A)

A Venn Diagram doesn't really show the 'in between state' of A (occurred) and B (not occurred) too well. A better way to visualize CP is to use a Probability Tree. In this tree, each branch represents a set of probabilities for a single event. Events are represented as nodes. Subsequent events are sub branched from the original event nodes. The sum of each set of branches that share a common origin node must be 1 (or 100%).

In the tree above, I'm using some new notation. A' is the complement of A, which means that P(A') is the probability that A will not happen. The complementary probability is accounted for in a probability tree where it is 'implied' in a venn diagram.

This tree shows the possible state map for A and B, in a specific order. If A occurs, there is a probability that B will or will not occur, noted by the conditional probabilities. The same set of possible B states is available if A doesn't occur. The probability tree makes it clear that conditional probabilities represent the state where A (or A') have occurred.The tree above is incomplete because it doesn't assign actual probabilities to the states. Possible probabilities are shown below.

P(B | A) = P(B ∩ A)/P(A) ->

B ∩ A = P(A)*P(B | A)

P(A) = 25%, P(B | A) = 33%, P(B∩A) = 25%*33% = 8.25%

P(B) = P(A)*P(B | A) + P(A')*P(B | A')

= 25%*33% +75%*55% = 8.25% +41.25% = 49.5%.

Conditional Probability and Independent Events

All of that is neat (really!), but how do we tie it back to providing real value to our site? We do this by realizing how CP helps determine whether an event is independent or not. If A and B are truly independent, then

P(B | A) = P(B).

In other words, B is independent from A when B happens regardless of whether A happens. An example of this is two consecutive rolls of a dice. The probability of getting a 1 on the second roll is not affected by the value obtained from the first roll.

Contrast this with two dependent variables. If I had 5 pairs of black socks in a drawer (B), and 5 pairs of white socks (W), and wanted to calculate the probability of getting a black pair P(B) when pulling a pair out of my drawer, it would originally be 50%, but would change because there would be one pair less of black or white socks after my first pull.

If P(B | A) = P(B), and

P(B | A) = P(B ∩ A)/P(A), then for independent events,

P(B) = P(B ∩ A)/P(A) because P(B | A) = P(B) and

P(B)*P(A) = P(B ∩ A)

So if B is independent from A, the probability of B happening after A is the same as the probability of B multiplied by the probability of A.

If P(B ∩ A) > P(B)*P(A), the two are positively correlated -- there is a greater possibility of B occurring when A occurs than the two occurring independently.

If P(B ∩ A) < P(B)*P(A), the two are negatively correlated -- there is less of a possibility of B occurring when A occurs than the two occurring independently.

Variable (in)dependence is something to consider when recommending products or content. If we recommend content that we think is correlated when it is (a) independent or (b)negatively correlated, that recommendation may drown out other recommendations that are actually positively correlated, but whose probabilities are much less than the independent or negatively correlated item.

Conclusion (So Far)

As discussed above, calculating CP is part of a larger effort to determine whether pages are correlated, independent, or anti-correlated. To do this for two events A and B it is necessary to know P(B | A), P(B), and P(A). In my next post I'll provide a high level algorithm to get these numbers and determine correlated page views from a generic serve log containing pages viewed by user ID.